Alphinity has partnered with CSIRO, Australia’s premier national scientific research agency, to develop a Responsible AI (R-AI) Framework for investors that will be published in a joint report later this year. The scoping assessment and insights outlined in this note will be used to inform this broader R-AI project. Further information and insights from the completion of this project will be published throughout the year.

Introduction

There are many benefits to artificial intelligence (AI): it can make fast work of huge amounts of data, speed up processes, and collaborate with humans to provide a better outcome than either could create on its own. However, if not done responsibly, the development of AI technology can come with significant risks.

The launch of ChatGPT has led to an explosion of attention and commentary on the topic in recent months. It has been celebrated for its ability to instantaneously consolidate research and construct text as a person might, potentially saving a huge amount of time for students, researchers and writers. At the same time, it has been criticised for not always having up-to-date or correct information, threatening other parties’ intellectual property, and posing a threat to jobs and industries.

In 2022, at least 35% of companies reported using AI in their business, and a further 42% reported they were exploring AI (source: IBM Global AI Adoption Index 2022). In our view, looking at the risks associated with AI through a technology lens alone tells only part of the story. We also need to look closely at the users of AI and examine ways in which it might present business-related threats and opportunities, or have broader system-wide impacts on certain sectors.

Scoping assessment to identify risks by sector

To help us better understand the various threats and opportunities for AI developers and users, we have completed a scoping exercise to identify the range of AI applications, threats and opportunities, and materiality of ESG considerations by sector.

The outcomes of this assessment will help guide further analysis for particular sectors and identify focus areas for our research project with CSIRO.

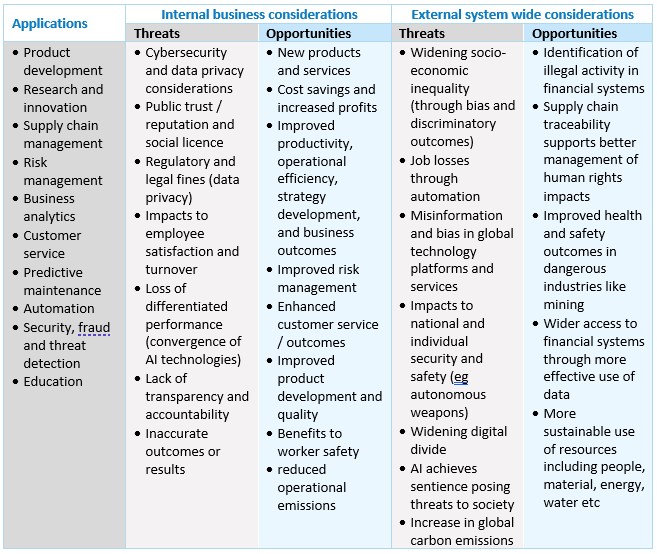

We identified threats and opportunities across two categories:

- Internal business considerations: threats and opportunities which could impact individual businesses directly

- External system wide considerations: threats and opportunities which could have a wider implication in terms of national or global systems, sectors, communities, or the environment

Scoping assessment outcomes

Although companies in the technology sector will likely experience the most significant risks, and equally may cause the most important system wide impacts, many other companies and sectors will also be materially affected by AI.

Risks associated with cyber security and data privacy are universal across all sectors. Opportunities to do with worker safety and asset optimisation, however, are quite specific to businesses in certain sectors like industrials, materials and communications. Threats associated with public trust, social licence, and bias are most relevant to companies with customer- or community-facing services, such as those in the financials, healthcare and consumer sectors.

Threats associated with sentience are relevant and material to all sectors; however, sectors which are developers of AI have the most control and influence over this issue. Although we expect that most sectors would have some companies which develop AI, this would be most prevalent within the technology and industrials sectors.

Applications, threats and opportunities

The following table summarises the primary applications, internal business considerations, and external system-wide considerations that we found across sectors.

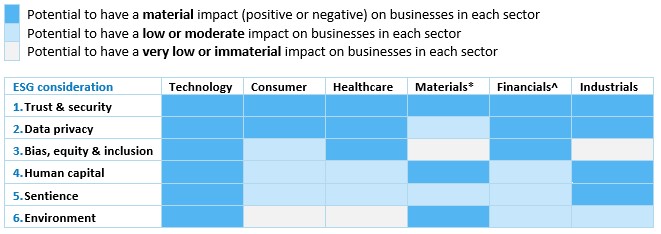

Materiality of ESG considerations by sector

Looking at AI threats and opportunities through a different lens, we have identified six core ESG considerations we assess as relevant to most sectors. We have considered the materiality of each ESG consideration for all sectors and categorised them as either very low or immaterial, low or moderately material, or very material.

The table below presents a summary of this materiality assessment for six key sectors.

* The communications and energy sectors are assessed similarly to materials

^ Financials includes banks, insurers, and diversified financials

- Trust & security: Concerns about AI security, accuracy, accountability, transparency and reliability all create risks associated with trust. Right now, big data and AI are seen as a black box. It is very difficult to trace ‘decision making’, investigate errors or issues, or explain the various workings of AI algorithms to many of their users. For AI to work properly, significant time is needed to ensure algorithms are trained, tested, kept up to date, and adequately secure. These are things the technology sector is of course working to manage. However, as mass use of AI is rolled out, we expect that there will be different types of issues, errors, and potentially significant controversies for some businesses. These events can negatively impact a company’s social licence, lead to legal consequences, and ultimately hinder the roll out and uptake of AI more broadly.

- Data privacy: More data means more opportunities for cyber security and data privacy breaches. The use of big data and analytics will also bring together a range of datasets that may or may not have been used by businesses in the past. This change could create ethical and legal issues related to data privacy, fraud and security, and consent. A good example of this is the use of ‘trace data’ (e.g. social media information) in health research, which blurs the line under health-related data privacy laws. Another example is the use of customer data for marketing purposes. Many consumers believe that this is a breach of customer privacy and consent, which creates a dilemma for businesses that are trying to balance the drive to improve marketing with the need to manage customer expectations.

- Bias, equity & inclusion: It’s well recognised that the use of AI could have positive and negative consequences for different groups in society. This is often referred to as bias. It is generally accepted that these biased outputs typically follow classical societal biases like race, gender, biological sex, nationality or age, and are the result of biased inputs or datasets. There are also potential positive outcomes for inclusion from the use of AI. For example, the use of AI in financial services can improve access through faster, more accurate processing of applications, and the use of AI and automation in healthcare could enable clinicians to deliver diagnostic services remotely.

- Human capital: The increased use of AI to automate and simplify repetitive or manual tasks can improve employee satisfaction, help manage labour shortages, enhance training and outcomes, and improve worker safety significantly, especially in high-risk environments like mining. However, this could also lead to significant job losses globally, which will most likely have a disproportionate impact on people in minority groups and people in lower-paid roles who are already struggling in terms of financial security and work opportunities.

- Sentience: The ultimate big picture risk associated with AI is sentience. AI becomes sentient when it achieves the intelligence to think, feel, and perceive the physical world around it just as humans do. We have flagged this as material for all sectors; however, it is most material for the technology and industrials sectors, since those companies will be responsible for the design and development of most AI systems.

- Environment: Significant amounts of energy are required to train and run AI models. The materiality of this issue depends on the source of the energy used and is most relevant to businesses in the technology sector, which will build and run these models. For the materials sector (primarily mining and building products), this issue is pertinent because of the potential improvement in resource management through asset optimisation, automation, and operational efficiency.

Questions to ask companies

The application of and risks associated with AI are extremely complex. As an initial step, good questions to ask companies are:

- How much is being invested in AI related products and technology?

- Is the company a developer, a user, or both?

- What governance structures are in place to ensure AI is being applied responsibly?

- What are the primary ESG related considerations (using the list above) and how are these being managed?

- Does the company have an R-AI policy?

- What metrics does the management team use to track the implementation of this policy?

- What are the longer-term implications of AI for the business or sector, and how are these consciously being addressed?

Summary

There are many benefits to AI. It can make fast work of huge amounts of data, speed up processes, and collaborate with humans to provide a better outcome than either could create on its own. However, if not done responsibly, the development of AI technology can come with significant risks.

To help us better understand the various threats and opportunities for AI developers and users, we have completed a scoping exercise to identify the range of AI applications, threats and opportunities, and materiality of ESG considerations by sector.

This exercise has demonstrated that:

- Almost all sectors are expected to use AI to some extent. The range of applications spans business risk management, customer service, predictive maintenance, and product research and development.

- Although companies in the technology sector may experience the most significant risks, and equally may cause the most important system wide impacts, many other companies and sectors will also be materially affected by AI.

- There are six categories of ESG considerations we can use to assess threats and opportunities across all sectors. These are trust and security, data privacy, bias, equity and inclusion, human capital, sentience, and environment.

We believe that most of the companies in our portfolios will have some level of exposure to AI. Our analysis has reinforced the need to establish a clear framework we can use to assess the governance, design and application of AI products for developers and users so that this exciting technology is used responsibly.

Keep an eye out for updates on our R-AI Framework project with CSIRO. We will publish insights as we progress throughout this year.

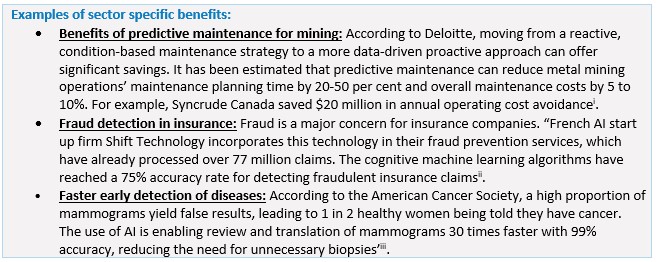

[i] What the mining and metals industry can gain from predictive analytics (australianmining.com.au)

[ii] Insurance Fraud Prevention Gets Help From Artificial Intelligence (samsung.com)

[iii] AI and robotics are transforming healthcare: Why AI and robotics will define New Health (PwC)

Author: Jessica Cairns, Head of ESG & Sustainability